"Can I use a third-party top-up service for the Claude developer platform?" is a question developers in China often ask. The short answer: third-party top-ups are not recommended, and there are better alternatives. This article examines the limitations of the official Claude platform in detail and introduces three better ways to use the Claude API.

Core Value: By reading this article, you'll understand the risks associated with Claude API top-up services, master safer and more stable API calling methods, and learn how to use the Prompt Caching feature to lower your costs.

Why Official Claude API Topping Up Isn't Recommended

Many developers want to use the official Claude developer platform (console.anthropic.com) by topping up through third parties, but this approach comes with multiple risks and limitations.

| Issue Type | Specific Manifestation | Impact Level |
|---|---|---|
| Payment Restrictions | Only international credit cards (Visa/Mastercard) are supported | High |
| Account Risk | Topped-up accounts are prone to being banned | Extremely High |
| Severe Rate Limiting | New Tier 1 accounts have very low limits | High |
| Network Issues | Unstable direct connections from within China | Medium |
| Cost Issues | High premiums + foreign exchange fees | Medium |

Official Claude Platform Payment Method Restrictions

According to Anthropic's official documentation, the Claude API uses a prepaid credits model:

- Supported Payment Methods: Visa, Mastercard, American Express, Discover

- Transaction Currency: USD only

- Credit Validity: Valid for 1 year after purchase, non-extendable

- Refund Policy: All top-ups are non-refundable

🚨 Risk Alert: A third-party top-up essentially means using someone else's account or payment channel. Anthropic explicitly prohibits account sharing and resale. If an anomaly is detected, the account can be banned without notice, and any remaining credits are lost.

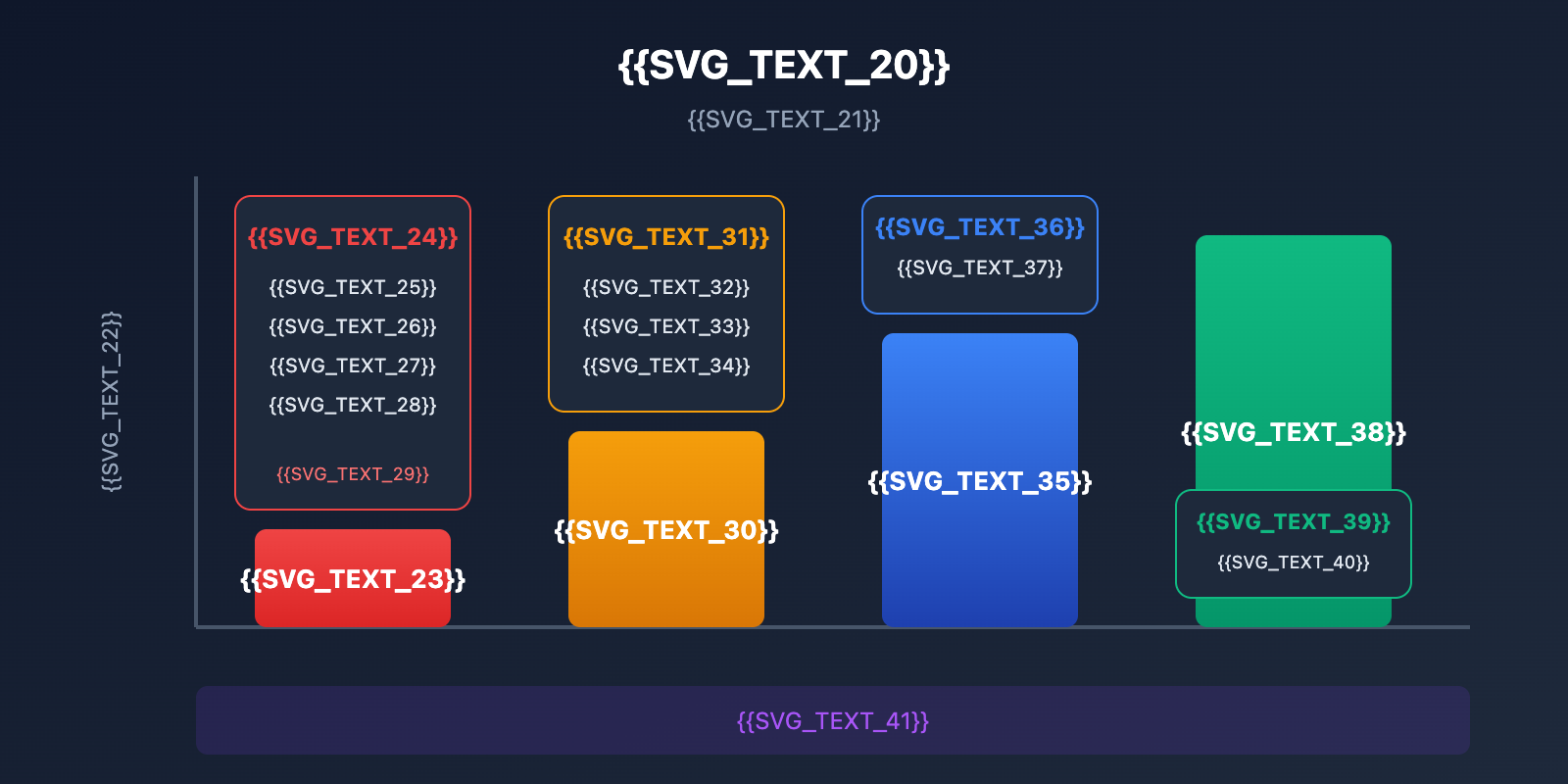

Official Claude API Rate Limiting Mechanism

The Claude API uses a tiered rate-limiting mechanism (Usage Tiers). New accounts start at Tier 1:

| Tier | Top-up Requirement | RPM Limit | TPM Limit | Monthly Spending Limit |
|---|---|---|---|---|
| Tier 1 | $5+ | 50 | 40K | $100 |
| Tier 2 | $40+ | 1,000 | 80K | $500 |
| Tier 3 | $200+ | 2,000 | 160K | $1,000 |
| Tier 4 | $400+ | 4,000 | 400K | $5,000 |

The Problem: Even if topping up is successful, new accounts face strict rate limits that often fail to meet normal development needs. Upgrading to higher tiers requires consistent spending over several months, which carries a very high time cost.
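Whatever the tier, a client can avoid most rate-limit errors by throttling itself below the requests-per-minute (RPM) ceiling. The following is a minimal sketch of a sliding-window limiter, not an official mechanism; the class name, method names, and the injectable `clock` parameter are illustrative assumptions.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Client-side throttle: allow at most `max_requests` per `window_seconds`."""

    def __init__(self, max_requests, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._sent = deque()  # timestamps of requests still inside the window

    def wait_time(self):
        """Return how many seconds to wait before the next request is allowed."""
        now = self.clock()
        # Drop timestamps that have left the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            return 0.0
        # The oldest request must leave the window before another may be sent.
        return self.window_seconds - (now - self._sent[0])

    def record(self):
        """Record that a request was just sent."""
        self._sent.append(self.clock())
```

For the Tier 1 limit in the table above, one would create `SlidingWindowLimiter(max_requests=50)`, call `time.sleep(limiter.wait_time())` before each API call, and call `limiter.record()` right after sending it.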

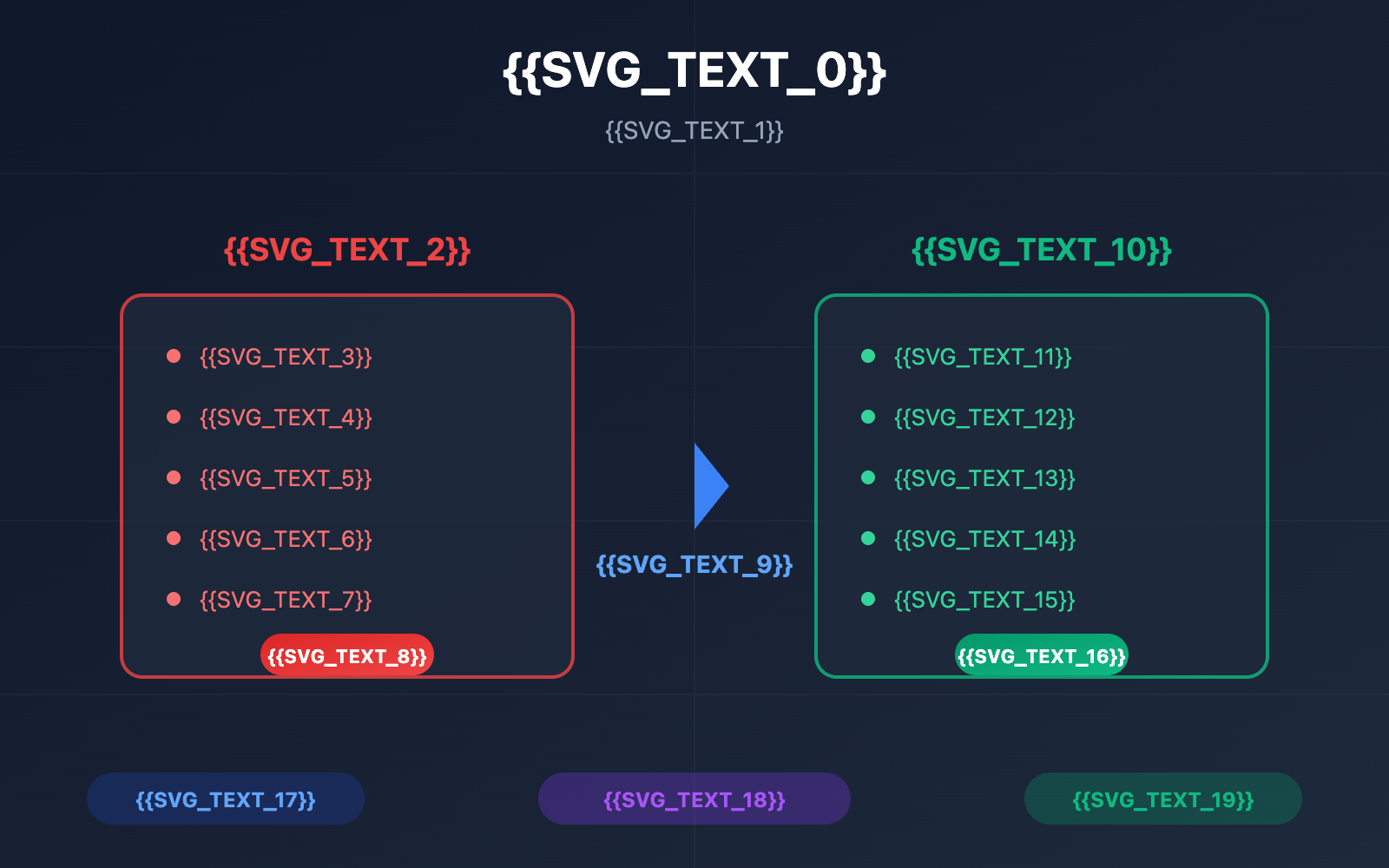

Comparison of 3 Claude API Alternatives

To meet the actual needs of developers in China, we recommend the following three alternatives:

| Solution | Pros | Cons | Recommended Scenarios |
|---|---|---|---|
| API Proxy Service | No credit card needed, high quotas, supports caching | Requires choosing a reliable provider | Individual developers, SMB teams |
| AWS Bedrock | Enterprise-grade stability, compliant | Complex configuration, higher costs | Large enterprises |
| Google Vertex AI | GCP ecosystem integration | Requires a GCP account | Teams already using GCP |

Solution 1: API Proxy Services (Recommended)

API proxy services are currently the best choice for developers in China. They allow you to call the Claude API through compliant relay nodes without dealing with payment or network headaches.

Core Advantages:

- Easy Payment: Supports domestic payment methods like Alipay and WeChat.

- No Rate Limit Issues: Proxy providers have completed Tier upgrades, offering shared high quotas.

- Stable Network: Optimized routing for domestic access.

- Full Feature Support: Supports all features, including Prompt Caching and Extended Thinking.

🎯 Technical Tip: We recommend using the APIYI (apiyi.com) platform to call the Claude API. It provides an OpenAI-compatible interface and supports the full Claude model family, including the latest Prompt Caching feature.

Quick Integration Example:

import openai

client = openai.OpenAI(
    api_key="sk-your-apiyi-key",
    base_url="https://api.apiyi.com/v1",  # APIYI unified endpoint
)

response = client.chat.completions.create(
    model="claude-sonnet-4-20250514",
    messages=[
        {"role": "user", "content": "Hello, Claude!"}
    ],
)

print(response.choices[0].message.content)

Solution 2: AWS Bedrock

Amazon Bedrock is a managed AI service from AWS that supports the full Claude model series.

Best For:

- Enterprises with existing AWS infrastructure.

- Projects with strict compliance requirements.

- Scenarios requiring deep integration with other AWS services.

Things to Note:

- Requires an AWS account and complex configuration.

- Pricing is the same as Anthropic's official rates.

- There can be a slight delay in the release of some new features.

Solution 3: Google Vertex AI

Google Cloud's Vertex AI also provides access to Claude models.

Best For:

- Teams already embedded in the GCP ecosystem.

- Projects requiring integration with Google Cloud services.

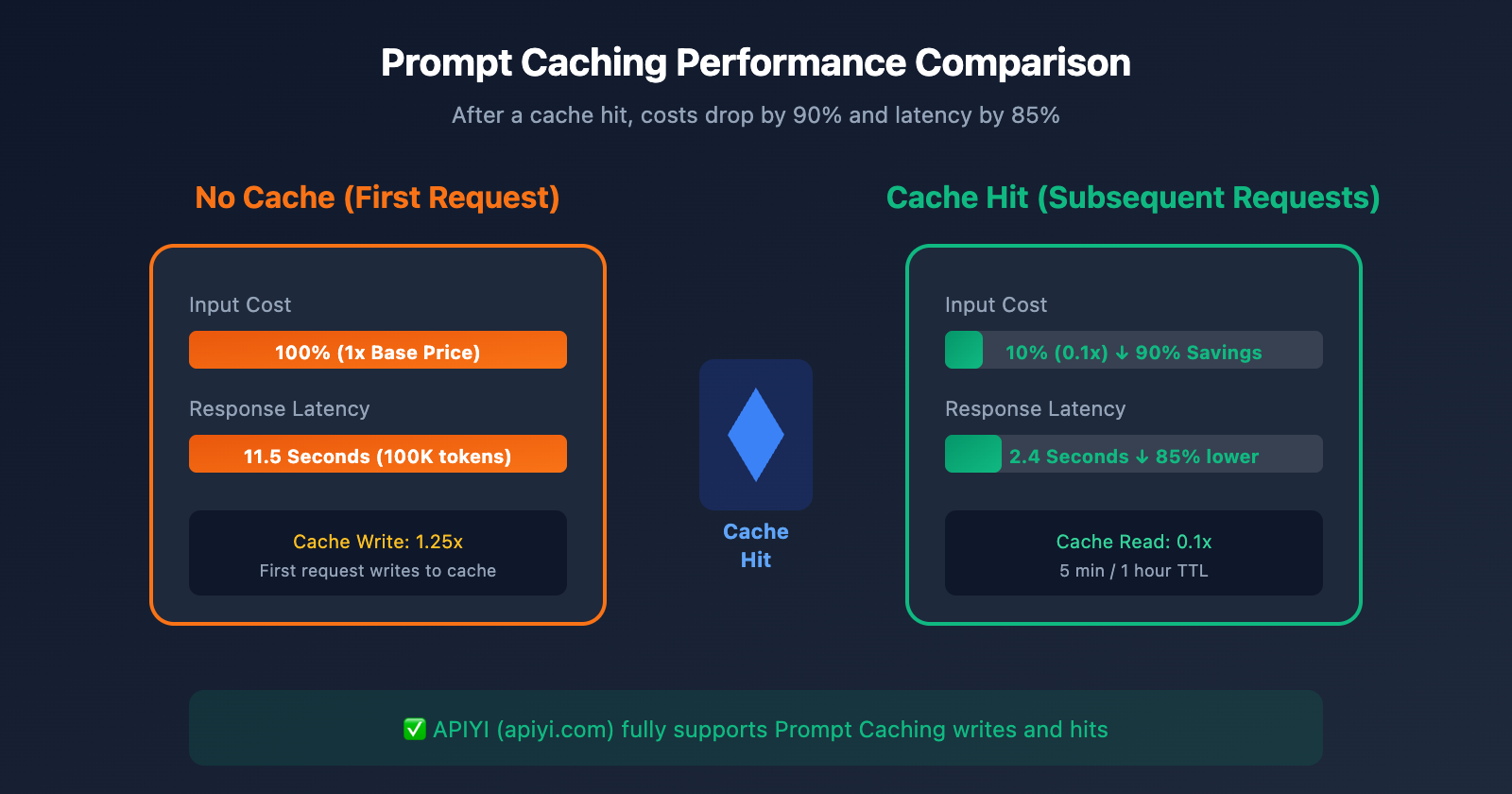

Deep Dive into Claude API Prompt Caching

Prompt Caching is a key feature of the Claude API that significantly reduces costs and latency. When choosing an alternative, it's crucial to ensure it supports this feature.

Core Principles of Prompt Caching

| Concept | Explanation |
|---|---|
| Cache Write | The first request writes the prompt content into the cache. |
| Cache Hit | Subsequent requests reuse the cache, slashing costs. |
| TTL | 5 minutes (default) or 1 hour (extended). |
| Min Tokens | At least 1,024 tokens are required for Sonnet and Opus models; Haiku models require more (2,048 for Claude Haiku 3.5). |
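To make the interaction between cache writes, cache hits, and the TTL concrete, here is a small simulation. It is a sketch under two assumptions: every request shares the same cacheable prefix, and each use of the cache restarts its TTL (which is how Anthropic's documentation describes the default cache, to the best of our understanding). The function name `classify_requests` is hypothetical.

```python
def classify_requests(timestamps, ttl_seconds=300):
    """Label each request time (in seconds) as a cache 'write' or 'hit'.

    Assumes every request uses the same cacheable prefix and that each
    use of the cache restarts its TTL.
    """
    labels = []
    expires_at = None  # time at which the cached prefix expires
    for t in sorted(timestamps):
        if expires_at is not None and t < expires_at:
            labels.append("hit")
        else:
            labels.append("write")
        expires_at = t + ttl_seconds  # both writes and hits restart the TTL
    return labels


# Requests at 0s, 200s, 450s and 800s with the default 5-minute TTL.
print(classify_requests([0, 200, 450, 800]))
# The same traffic with the 1-hour TTL.
print(classify_requests([0, 200, 450, 800], ttl_seconds=3600))
```

With the 5-minute TTL the request at 800s arrives after the cache has expired and triggers a second write; with the 1-hour TTL only the first request is a write.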

Prompt Caching Price Comparison

| Operation Type | Price Multiplier | Description |
|---|---|---|
| Regular Input | 1x | Base price |
| Cache Write (5 min) | 1.25x | Slightly more expensive for the first write |
| Cache Write (1 hour) | 2x | Extended cache duration |
| Cache Read | 0.1x | Save 90% in costs |
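The multipliers above can be turned into a quick cost estimate. The sketch below compares the input cost of a shared prompt prefix with and without caching. It assumes the first request writes the cache and every later request hits it; the function name and the base price passed in are placeholders, not official figures.

```python
def input_cost(prefix_tokens, requests, base_price_per_mtok,
               write_multiplier=1.25, read_multiplier=0.1):
    """Return (uncached_cost, cached_cost) for the shared prompt prefix only.

    `base_price_per_mtok` is the regular input price per million tokens.
    Assumes the first request writes the cache and all later ones read it.
    """
    if requests <= 0:
        return 0.0, 0.0
    per_token = base_price_per_mtok / 1_000_000
    uncached = prefix_tokens * requests * per_token
    cached = prefix_tokens * per_token * (
        write_multiplier + read_multiplier * (requests - 1)
    )
    return uncached, cached


uncached, cached = input_cost(100_000, 10, base_price_per_mtok=3.0)
print(f"uncached: ${uncached:.3f}, cached: ${cached:.3f}")
```

At a placeholder base price of $3 per million tokens, a 100,000-token prefix sent 10 times costs about $3.00 uncached versus about $0.65 cached. A single request is more expensive with caching (1.25x versus 1x); the 5-minute cache pays off from the second request (1.35x versus 2x), while the 1-hour cache, with its 2x write, pays off from the third (2.2x versus 3x).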

Performance Boost: Official Anthropic data shows that with Prompt Caching:

- Latency drops by up to 85% (for example, 11.5s → 2.4s in a 100K-token scenario, which is roughly a 79% reduction)

- Costs drop by up to 90%

Code Example Using Prompt Caching

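import openai

client = openai.OpenAI(
    api_key="sk-your-apiyi-key",
    base_url="https://api.apiyi.com/v1",  # APIYI supports caching
)

# System prompt (this part gets cached)
system_prompt = """
You are a professional technical documentation assistant. The product documentation follows:
[Place the bulk of the documentation here; 1,024+ tokens recommended]
...
"""

response = client.chat.completions.create(
    model="claude-sonnet-4-20250514",
    messages=[
        {
            "role": "system",
            "content": system_prompt,
            # Mark the cache breakpoint
            "cache_control": {"type": "ephemeral"},
        },
        {"role": "user", "content": "Please summarize the core features in the documentation"},
    ],
)

# Check cache status. These fields are not part of the standard OpenAI usage
# object, so fall back to 0 if the provider does not return them.
usage = response.usage
print(f"Cache write: {getattr(usage, 'cache_creation_input_tokens', 0)} tokens")
print(f"Cache hit: {getattr(usage, 'cache_read_input_tokens', 0)} tokens")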

Full caching configuration example:

import openai


class ClaudeCacheClient:
    def __init__(self, api_key: str):
        self.client = openai.OpenAI(
            api_key=api_key,
            base_url="https://api.apiyi.com/v1",
        )
        self.system_cache = None

    def set_system_prompt(self, content: str):
        """Set a system prompt marked for caching."""
        self.system_cache = {
            "role": "system",
            "content": content,
            "cache_control": {"type": "ephemeral"},
        }

    def chat(self, user_message: str) -> str:
        """Send a message, automatically reusing the cached system prompt."""
        messages = []
        if self.system_cache:
            messages.append(self.system_cache)
        messages.append({"role": "user", "content": user_message})

        response = self.client.chat.completions.create(
            model="claude-sonnet-4-20250514",
            messages=messages,
        )

        # Print cache statistics (the fields may be absent on some providers)
        usage = response.usage
        read = getattr(usage, "cache_read_input_tokens", 0) or 0
        written = getattr(usage, "cache_creation_input_tokens", 0) or 0
        if read + written > 0:
            hit_rate = read / (read + written) * 100
            print(f"Cache hit rate: {hit_rate:.1f}%")

        return response.choices[0].message.content


# Usage example
client = ClaudeCacheClient("sk-your-apiyi-key")
client.set_system_prompt("You are a technical documentation assistant..." * 200)  # long text, over 1,024 tokens

# Repeated calls reuse the cache
print(client.chat("Question 1"))
print(client.chat("Question 2"))  # cache hit: cached input tokens are billed at 0.1x

💡 Recommendation: The APIYI (apiyi.com) platform fully supports Claude Prompt Caching, including cache writes and hits. No extra configuration is needed—just use the standard interface to start optimizing your costs and performance.

Quick Start Guide for Claude API Proxy Solutions

Step 1: Get Your API Key

- Visit APIYI (apiyi.com) and register for an account.

- Head over to the console and create an API Key.

- Top up your balance (it supports both Alipay and WeChat).

Step 2: Configure Your Development Environment

Python Environment:

pip install openai

Environment Variable Configuration:

export OPENAI_API_KEY="sk-your-apiyi-key"

export OPENAI_BASE_URL="https://api.apiyi.com/v1"
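With these variables exported, the key no longer needs to be hardcoded in source files. The helper below is a minimal sketch of reading and validating them before creating a client; the function name `load_api_config` and the validation rules are assumptions made for illustration.

```python
import os


def load_api_config(env=None):
    """Read the API key and base URL from environment variables.

    `env` defaults to os.environ; passing a dict makes the function testable.
    """
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY", "").strip()
    base_url = env.get("OPENAI_BASE_URL", "").strip()
    if not api_key:
        raise RuntimeError("OPENAI_API_KEY is not set")
    if not base_url.startswith("https://"):
        raise RuntimeError("OPENAI_BASE_URL must be an https:// URL")
    # Strip a trailing slash so paths can be appended consistently.
    return {"api_key": api_key, "base_url": base_url.rstrip("/")}
```

The returned dictionary can be passed straight to the client constructor, for example `openai.OpenAI(**load_api_config())`.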

Step 3: Test the API Connection

import openai

client = openai.OpenAI(
    api_key="sk-your-apiyi-key",
    base_url="https://api.apiyi.com/v1",
)

# Test Claude Sonnet 4
response = client.chat.completions.create(
    model="claude-sonnet-4-20250514",
    messages=[{"role": "user", "content": "Hello, please introduce yourself"}],
    max_tokens=500,
)

print(response.choices[0].message.content)

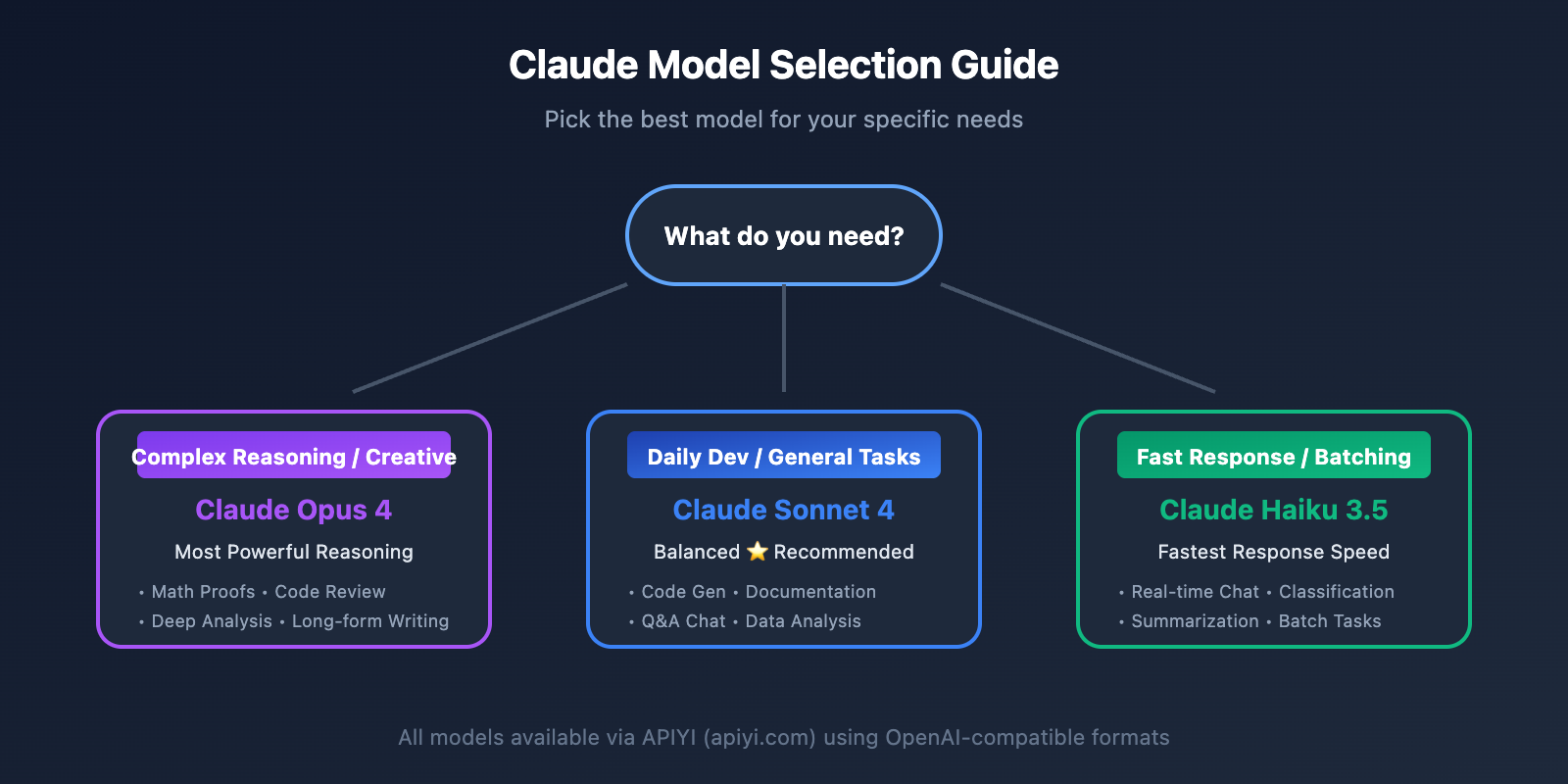

Supported Claude Models

| Model Name | Model ID | Key Features | Available Platforms |
|---|---|---|---|
| Claude Opus 4 | claude-opus-4-20250514 | Strongest reasoning capabilities | APIYI (apiyi.com) |
| Claude Sonnet 4 | claude-sonnet-4-20250514 | Balanced performance and cost | APIYI (apiyi.com) |
| Claude Haiku 3.5 | claude-3-5-haiku-20241022 | Rapid response times | APIYI (apiyi.com) |
| Claude Sonnet 3.5 | claude-3-5-sonnet-20241022 | Classic stable version | APIYI (apiyi.com) |

Detailed Comparison: Official Platform vs. Proxy Solution

| Comparison Metric | Claude Official Platform | API Proxy Solution |
|---|---|---|
| Payment Methods | International Credit Card | Alipay / WeChat |
| Network Access | Requires Proxy/VPN | Direct domestic connection |
| Rate Limiting | Tier-based constraints | Shared high-quota limits |
| Account Risk | Third-party top-ups may lead to bans | Zero risk |
| Feature Support | Full | Full (including caching) |
| Technical Support | English tickets | Chinese-speaking support |
| Billing Model | Pre-payment | Pay-as-you-go |
| Barrier to Entry | High | Low |

FAQ

Q1: Is third-party Claude API top-up really a bad idea?

It's definitely not recommended. Third-party top-up services come with several core issues:

- Account Security: Anthropic detects unusual login and payment behaviors. The risk of getting your account banned with third-party top-ups is extremely high.

- Rate Limiting: Even if the top-up succeeds, Tier 1 limits for new accounts are very strict, making it difficult to use for actual production.

- Financial Risk: Once an account is banned, the topped-up balance usually can't be refunded.

By using legitimate relay services like APIYI (apiyi.com), you can avoid these headaches while enjoying higher quotas and more stable service.

Q2: Do relay services support Prompt Caching?

Yes, they do. APIYI (apiyi.com) fully supports Claude's Prompt Caching features, including:

- 5-minute standard caching

- 1-hour extended caching

- Cache write and hit statistics

- Up to 90% cost savings

The usage is identical to the official API—just add the cache_control parameter to your messages.
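For comparison, in Anthropic's native Messages API the `cache_control` marker sits on a content block (here, a system text block) rather than on the message object itself. The sketch below only builds the request body and sends nothing. The helper name `build_cached_request` is hypothetical, and the `"ttl": "1h"` value reflects our understanding of the official documentation for the extended cache; whether a relay accepts this native shape or the message-level form shown earlier is provider-specific.

```python
def build_cached_request(model, system_text, user_text, ttl=None, max_tokens=1024):
    """Build a Messages API request body whose system prompt is a cache breakpoint."""
    cache_control = {"type": "ephemeral"}
    if ttl is not None:
        cache_control["ttl"] = ttl  # e.g. "1h" for the extended cache
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": [
            # The cache breakpoint is attached to the content block.
            {"type": "text", "text": system_text, "cache_control": cache_control}
        ],
        "messages": [{"role": "user", "content": user_text}],
    }


body = build_cached_request(
    "claude-sonnet-4-20250514",
    "Long product documentation goes here...",
    "Please summarize the core features",
    ttl="1h",
)
print(body["system"][0]["cache_control"])
```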

Q3: Is the latency of relay services higher than the official API?

In practice, the latency of high-quality relay services is basically on par with the official API, and sometimes even faster. This is because:

- Relay providers deploy optimized nodes across multiple regions.

- Direct connections avoid the extra latency introduced by personal VPNs or proxies.

- High-tier accounts enjoy higher processing priority.

We recommend doing your own testing to choose the solution with the lowest latency for your location.
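One simple way to run such a test is to time the same request several times against each candidate endpoint and compare the medians. The helper below is a minimal sketch; `measure_latency` and its parameters are illustrative names, and `call` stands for any zero-argument function that performs one API request.

```python
import statistics
import time


def measure_latency(call, runs=5, clock=time.perf_counter):
    """Run `call` several times and return (median, max) latency in seconds."""
    samples = []
    for _ in range(runs):
        start = clock()
        call()
        samples.append(clock() - start)
    return statistics.median(samples), max(samples)
```

For example, wrapping a chat completion request in a lambda and passing it as `call` gives a median that is less sensitive to a single slow response than a one-off measurement.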

Q4: How can I ensure data security?

When choosing a legitimate relay service provider, keep these points in mind:

- Check the provider's privacy policy and data processing terms.

- Ensure they support HTTPS encrypted transmission.

- Understand their log retention policy.

- Consider pre-processing sensitive data locally.

APIYI (apiyi.com) guarantees that it doesn't store user conversation data, keeping only the necessary logs for billing purposes.
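Local pre-processing of sensitive data, the last point in the list above, can be as simple as masking obvious identifiers before the text leaves your machine. The following is a rough sketch, not a complete PII filter: the patterns cover only email addresses and 11-digit mainland China mobile numbers, and the names `redact`, `EMAIL_RE`, and `PHONE_RE` are illustrative.

```python
import re

# Simplified email pattern; real-world addresses can be more varied.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Mainland China mobile numbers: 11 digits, starting with 1, second digit 3-9.
PHONE_RE = re.compile(r"(?<!\d)1[3-9]\d{9}(?!\d)")


def redact(text):
    """Replace email addresses and mobile numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


print(redact("Contact alice@example.com or 13800138000."))
```

Running the redaction before building the `messages` list keeps the raw identifiers out of any provider-side logs.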

Q5: How do relay prices compare to official rates?

Relay services are usually priced the same as the official rates or even offer slight discounts. Plus, you save on:

- International credit card transaction fees

- Foreign exchange conversion losses

- Costs for proxy tools

When you factor everything in, the actual cost of using a relay service is often lower.

Summary

Third-party top-ups for the Claude API carry high account risk, strict rate limits, and payment hurdles, making them a poor choice for developers in China. A much better alternative is using a legitimate API relay service:

- Easy Payment: Supports local payment methods—no international credit card required.

- High Quotas: Share high-tier limits without worrying about strict rate limiting.

- Full Feature Set: Supports Prompt Caching (both writes and hits).

- Stable & Reliable: Direct connections and technical support in Chinese.

We recommend using APIYI (apiyi.com) for quick access to the Claude API. The platform supports the full range of Claude models, is fully compatible with official features, and you can get integrated in just 5 minutes.

References

- Anthropic API Billing: Rules for using Claude API prepaid credits
  - Link: support.claude.com/en/articles/8977456-how-do-i-pay-for-my-claude-api-usage

- Claude API Rate Limits: Official Usage Tier documentation
  - Link: docs.claude.com/en/api/rate-limits

- Prompt Caching Documentation: A guide to using the caching feature
  - Link: docs.claude.com/en/docs/build-with-claude/prompt-caching

Author: APIYI Team

Technical Support: For help with Claude API integration, please visit APIYI at apiyi.com