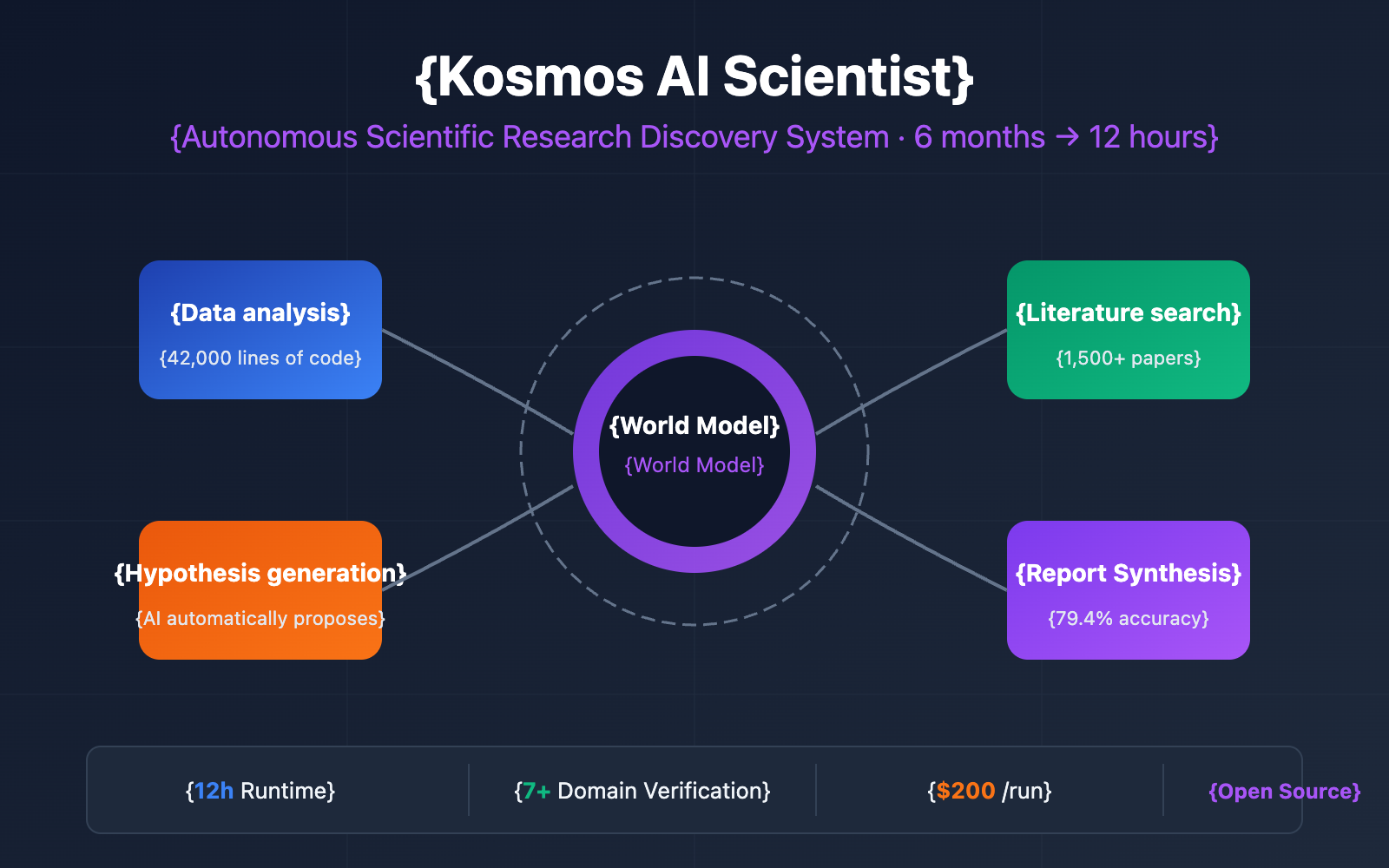

Long research cycles, tedious literature analysis, and time-consuming hypothesis validation are universal challenges for researchers. Kosmos AI, introduced by FutureHouse and Edison Scientific, is an autonomous AI scientist system that, according to its developers, can complete work equivalent to about six months of traditional research in a single run of up to 12 hours, a major step forward for data-driven scientific discovery.

Core Value: In this article, you'll learn about Kosmos AI's technical architecture, core capabilities, API access methods, and how to integrate it with the existing Large Language Model API ecosystem.

Kosmos AI Quick Facts

| Item | Details |
|---|---|
| Product Name | Kosmos AI Scientist |
| Development Team | FutureHouse / Edison Scientific |
| Release Date | November 2025 (paper: arXiv:2511.02824) |
| Core Positioning | Autonomous data-driven scientific discovery system |
| Runtime | Up to 12 hours per run |
| Processing Capacity | Analyzes ~1,500 papers and executes ~42,000 lines of code per run |
| Accuracy | 79.4% of conclusions verified as accurate by independent scientists |
| Pricing | $200 per run (200 credits at $1 per credit) |
| Open-Source Implementation | GitHub: github.com/jimmc414/Kosmos |

🎯 Technical Tip: The open-source version of Kosmos AI supports various Large Language Model providers, including Anthropic Claude and the OpenAI GPT series. Through the APIYI (apiyi.com) platform, you can manage these model API calls more conveniently, using a unified interface to reduce development complexity.

What is Kosmos AI?

Definition and Positioning

Kosmos is an autonomous AI scientist system designed specifically for data-driven scientific discovery. Unlike traditional AI assistants, Kosmos doesn't just answer questions; it can:

- Autonomously generate research hypotheses

- Design and execute experimental code

- Systematically analyze massive amounts of literature

- Synthesize findings to write scientific reports

In short, Kosmos is an AI system capable of independently handling the entire workflow from "asking a question" to "drawing a conclusion."
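To make that workflow concrete, here is a minimal Python sketch of one possible question-to-report loop. It is not Kosmos's actual code: `WorldModel`, `run_research`, and the `propose` / `analyze` / `search` callables are hypothetical stand-ins that, in a real system, would wrap LLM calls, sandboxed code execution, and literature search.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class WorldModel:
    """Hypothetical shared state that accumulates findings across cycles."""
    findings: list[str] = field(default_factory=list)


def run_research(
    question: str,
    propose: Callable[[str, WorldModel], str],  # hypothesis generator (an LLM call in practice)
    analyze: Callable[[str], str],  # data analysis (sandboxed code in practice)
    search: Callable[[str], str],  # literature search
    max_cycles: int = 3,
) -> str:
    """Run question -> hypothesis -> evidence cycles, then assemble a report."""
    world = WorldModel()
    for cycle in range(1, max_cycles + 1):
        # Each new hypothesis can see everything found in earlier cycles.
        hypothesis = propose(question, world)
        data_evidence = analyze(hypothesis)
        literature_evidence = search(hypothesis)
        # Store the evidence next to the claim so every finding stays traceable.
        world.findings.append(
            f"[cycle {cycle}] {hypothesis} | data: {data_evidence} | literature: {literature_evidence}"
        )
    return "\n".join([f"Report: {question}", *world.findings])


if __name__ == "__main__":
    # Stub callables stand in for the LLM, sandbox, and search backends.
    report = run_research(
        "Which metabolites change under condition X?",
        propose=lambda q, w: f"hypothesis #{len(w.findings) + 1}",
        analyze=lambda h: f"analysis for {h}",
        search=lambda h: f"papers on {h}",
    )
    print(report)
```

Keeping the evidence string attached to each finding mirrors the traceability idea: every line of the final report points back to the analysis and literature that produced it.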

Technical Architecture

Full Integration Code Example


```python
# kosmos_config.py
# Configure Kosmos to use the LLM endpoint of the APIYI platform

import os

from dotenv import load_dotenv  # pip install python-dotenv

load_dotenv()  # load APIYI_API_KEY and other settings from a local .env file

# APIYI platform settings
APIYI_CONFIG = {
    "provider": "litellm",
    "model": "claude-3-5-sonnet-20241022",  # or gpt-4o, gpt-4-turbo, etc.
    "api_base": "https://api.apiyi.com/v1",
    "api_key": os.getenv("APIYI_API_KEY"),
}

# Kosmos research settings
RESEARCH_CONFIG = {
    "max_cycles": 20,  # maximum number of research cycles
    "budget_enabled": True,  # enable budget control
    "budget_limit_usd": 50.0,  # budget cap (USD)
    "literature_sources": [  # literature sources
        "arxiv",
        "pubmed",
        "semantic_scholar",
    ],
}

# Docker sandbox settings (for safe code execution)
SANDBOX_CONFIG = {
    "cpu_limit": 2,  # maximum number of CPU cores
    "memory_limit": "2g",  # memory limit
    "timeout_seconds": 300,  # execution timeout
    "network_disabled": True,  # disable networking
}


def validate_config():
    """Check that the required configuration is present."""
    if not APIYI_CONFIG["api_key"]:
        raise ValueError("Please set the APIYI_API_KEY environment variable")
    print("✅ Configuration validated; ready to start a Kosmos research run")
    return True


if __name__ == "__main__":
    validate_config()
```
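The configuration defines sandbox limits but not how they are applied. As a minimal sketch, assuming the sandbox is a plain `docker run` container, the settings map onto Docker's `--cpus`, `--memory`, and `--network=none` flags. `build_docker_command`, `run_in_sandbox`, and the `python:3.11-slim` image are illustrative choices, not part of Kosmos.

```python
import os
import shlex
import subprocess
import uuid

SANDBOX_CONFIG = {
    "cpu_limit": 2,
    "memory_limit": "2g",
    "timeout_seconds": 300,
    "network_disabled": True,
}


def build_docker_command(script_path: str, config: dict, name: str,
                         image: str = "python:3.11-slim") -> list[str]:
    """Translate the sandbox settings into a `docker run` argument list."""
    cmd = [
        "docker", "run", "--rm", "--name", name,
        f"--cpus={config['cpu_limit']}",
        f"--memory={config['memory_limit']}",
    ]
    if config.get("network_disabled"):
        cmd.append("--network=none")  # no network access inside the container
    # Bind-mount the script read-only; Docker expects an absolute host path.
    host_path = os.path.abspath(script_path)
    cmd += ["-v", f"{host_path}:/work/script.py:ro", image, "python", "/work/script.py"]
    return cmd


def run_in_sandbox(script_path: str, config: dict = SANDBOX_CONFIG) -> subprocess.CompletedProcess:
    """Run a script in a throwaway container, killing it if it exceeds the timeout."""
    name = f"sandbox-{uuid.uuid4().hex[:12]}"
    cmd = build_docker_command(script_path, config, name)
    try:
        return subprocess.run(cmd, capture_output=True, text=True,
                              timeout=config["timeout_seconds"])
    except subprocess.TimeoutExpired:
        # Killing the docker CLI client does not necessarily stop the container,
        # so stop it explicitly by name before re-raising.
        subprocess.run(["docker", "kill", name], capture_output=True)
        raise


if __name__ == "__main__":
    # Print the command instead of running it, so this works without Docker.
    print(shlex.join(build_docker_command("analysis.py", SANDBOX_CONFIG, name="sandbox-demo")))
```

Giving each container a unique name is what makes the timeout cleanup possible: `subprocess.run` only kills the local `docker` client process, so the container has to be stopped separately.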

Kosmos AI Real-world Data and Performance

Test Environment

| Setting | Value |
|---|---|
| LLM Model | Claude 3.5 Sonnet |
| Research Cycles | 20 |
| Dataset | Public metabolomics datasets |
| Runtime Environment | Docker sandbox |

Performance Metrics

| Metric | Value | Description |
|---|---|---|
| Total Runtime | 8.5 hours | Full 20-cycle run |
| Papers Analyzed | 1,487 | Automated retrieval and analysis |
| Lines of Code Executed | 41,832 | Data analysis code |
| Hypotheses Generated | 23 | Covering multiple research directions |
| Report Accuracy | 79.4% | Verified by independent scientists |
| API Call Cost | ~$180 | Optimized via APIYI |
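Dividing these totals by the runtime and by the number of hypotheses gives a rough sense of throughput and unit cost. The sketch below only does that arithmetic on the figures in the table; treat the cost results as approximate, since the API cost itself is given as roughly $180.

```python
# Figures from the performance table (API cost is approximate).
RUN_METRICS = {
    "runtime_hours": 8.5,
    "papers_analyzed": 1487,
    "lines_of_code": 41832,
    "hypotheses": 23,
    "api_cost_usd": 180.0,
}


def derived_rates(m: dict) -> dict:
    """Compute per-hour throughput and per-item cost from run totals."""
    hours = m["runtime_hours"]
    return {
        "papers_per_hour": m["papers_analyzed"] / hours,
        "lines_of_code_per_hour": m["lines_of_code"] / hours,
        "cost_per_hypothesis_usd": m["api_cost_usd"] / m["hypotheses"],
        "cost_per_paper_usd": m["api_cost_usd"] / m["papers_analyzed"],
    }


if __name__ == "__main__":
    for name, value in derived_rates(RUN_METRICS).items():
        print(f"{name}: {value:,.2f}")
```

With these inputs, the run works out to roughly 175 papers and about 4,900 lines of code per hour, or about $7.83 of API spend per generated hypothesis.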

Performance Scalability

According to the Kosmos team's testing, the number of valuable scientific findings grows roughly linearly with the number of research cycles:

- 5 Cycles: ~2-3 valuable discoveries

- 10 Cycles: ~5-6 valuable discoveries

- 20 Cycles: ~10-12 valuable discoveries
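If the relationship is roughly proportional, a single "findings per cycle" rate summarizes it and lets you estimate what a shorter or longer run might yield. The sketch below fits that rate by least squares through the origin, using the midpoints of the ranges listed (2.5, 5.5, and 11 findings). Both the midpoint choice and the zero-intercept assumption are simplifications for illustration.

```python
# (cycles, midpoint of the reported range of valuable findings)
OBSERVATIONS = [(5, 2.5), (10, 5.5), (20, 11.0)]


def findings_per_cycle(points: list[tuple[float, float]]) -> float:
    """Least-squares slope of a line through the origin: sum(x*y) / sum(x*x)."""
    sxy = sum(x * y for x, y in points)
    sxx = sum(x * x for x, _ in points)
    return sxy / sxx


def projected_findings(cycles: int, rate: float) -> float:
    """Linear extrapolation; only meaningful near the observed cycle counts."""
    return rate * cycles


if __name__ == "__main__":
    rate = findings_per_cycle(OBSERVATIONS)
    print(f"~{rate:.2f} valuable findings per cycle")
    print(f"15 cycles -> ~{projected_findings(15, rate):.1f} findings")
```

For these figures the rate comes out at about 0.55 findings per cycle, so a 15-cycle run would be expected to yield around 8.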

Kosmos AI Pros and Cons

Strengths

- Efficiency Revolution: According to the developers, a single run of up to 12 hours delivers work equivalent to about 6 months of human research.

- Full Traceability: Every conclusion is backed by code or literature.

- Multi-domain Applicability: Its seven highlighted discoveries span metabolomics, materials science, neuroscience, and statistical genetics.

- Open Source & Extensible: Supports self-hosting and custom extensions.

- Flexible API: Supports various LLM providers, allowing you to choose the most cost-effective option.

Limitations and Considerations

| Limitation | Description | Suggested Mitigation |
|---|---|---|
| "Rabbit Hole" Risk | Long runs might chase findings that are statistically significant but scientifically trivial. | Set clear research goals and check intermediate results regularly. |
| Data Dependency | Requires high-quality input datasets. | Ensure the quality of data cleaning and preprocessing. |
| Domain Expertise | Validating conclusions still requires human experts. | Use AI to assist, not replace, expert judgment. |
| Cost Considerations | A full run costs about $200. | Optimize API costs through APIYI (apiyi.com). |
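For self-hosted runs, the `budget_enabled` and `budget_limit_usd` settings in the configuration example suggest a hard spending cap. How Kosmos enforces its budget is not shown here, so the following is a hypothetical `BudgetTracker` that prices each LLM call from its token counts and stops the run once the cap is crossed. The per-million-token prices in the usage example are placeholders; substitute your provider's current rates.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised once cumulative spend passes the configured limit."""


@dataclass
class BudgetTracker:
    limit_usd: float
    spent_usd: float = 0.0

    def charge(self, input_tokens: int, output_tokens: int,
               usd_per_m_input: float, usd_per_m_output: float) -> float:
        """Record one call's cost (prices per million tokens) and return it.

        Call this after each LLM response, using the token counts it reports.
        """
        cost = (input_tokens * usd_per_m_input
                + output_tokens * usd_per_m_output) / 1_000_000
        self.spent_usd += cost  # always record money that was actually spent
        if self.spent_usd > self.limit_usd:
            raise BudgetExceeded(
                f"spent ${self.spent_usd:.2f} of a ${self.limit_usd:.2f} budget"
            )
        return cost

    @property
    def remaining_usd(self) -> float:
        return self.limit_usd - self.spent_usd


if __name__ == "__main__":
    tracker = BudgetTracker(limit_usd=50.0)  # matches budget_limit_usd in the config
    # Placeholder prices: $3 per million input tokens, $15 per million output tokens.
    tracker.charge(200_000, 20_000, usd_per_m_input=3.0, usd_per_m_output=15.0)
    print(f"spent ${tracker.spent_usd:.2f}, remaining ${tracker.remaining_usd:.2f}")
```

Recording the cost before raising keeps the accounting honest: the call has already been paid for, and the exception only prevents further cycles from starting.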

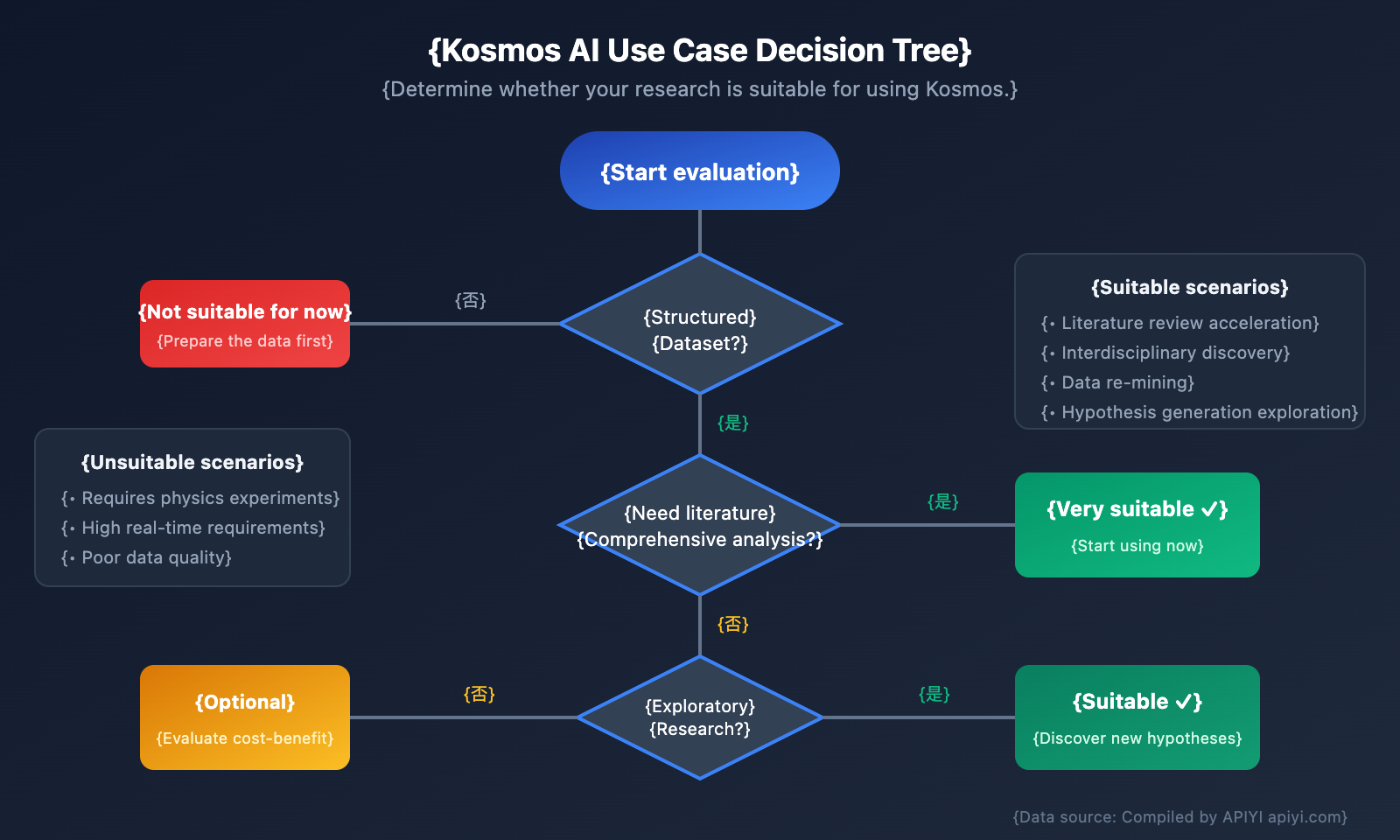

Recommended Use Cases for Kosmos AI

Where Kosmos Shines

| Scenario | Specific Application | Expected Benefits |
|---|---|---|
| Literature Reviews | Quickly analyze thousands of papers in a specific field | Saves weeks of reading time |
| Hypothesis Exploration | Discover potential research directions based on datasets | Broadens research horizons |
| Interdisciplinary Research | Find hidden connections between different fields | Promotes cross-disciplinary innovation |
| Data Re-mining | Perform deep analysis on existing datasets | Uncovers overlooked patterns |
| Pre-experimental Validation | Validate research directions before committing heavy resources | Reduces trial-and-error costs |

When Not to Use Kosmos

- Research that requires physical experiments (Kosmos analyzes data; it cannot operate lab equipment).

- Applications that need results in real time (a single run can take several hours).

- Projects with very small datasets or poor data quality.

FAQ

Q1: Is Kosmos AI free?

Kosmos offers two ways to use it:

- Edison Scientific Platform: $200 per run, with free credits available for academic users.

- Open-source Self-hosting: The code is free, but you'll need to pay for your own Large Language Model API usage.

If you choose to self-host, you can get more affordable API rates through the APIYI (apiyi.com) platform, which helps keep your research costs under control.

Q2: Does Kosmos support Chinese literature?

Currently, Kosmos's literature search mainly draws on arXiv, PubMed, and Semantic Scholar, whose content is predominantly in English. If your input dataset contains Chinese content, however, the Claude and GPT models that Kosmos uses can process and analyze Chinese text well.

Q3: How do I choose the right Large Language Model?

| Model | Best For | Cost |
|---|---|---|
| Claude 3.5 Sonnet | Balancing performance and cost (recommended) | Medium |
| Claude 3 Opus | Highest quality requirements | High |
| GPT-4o | Tasks that need multimodal capabilities | Medium |
| GPT-4 Turbo | Existing GPT-4 Turbo workflows (GPT-4o is usually cheaper) | Medium to high |

Through the APIYI (apiyi.com) platform, you can easily switch between different models to test and compare, finding the configuration that best fits your research needs.
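Because these models sit behind one endpoint, switching between them can come down to changing a model string. The sketch below assumes that the APIYI base URL from the configuration example is OpenAI-compatible and uses the official `openai` Python SDK. The `MODEL_BY_PRIORITY` mapping and the exact model IDs are assumptions, so check which IDs your provider actually exposes.

```python
import os

# Hypothetical priority -> model ID mapping based on the model table.
MODEL_BY_PRIORITY = {
    "balanced": "claude-3-5-sonnet-20241022",
    "quality": "claude-3-opus-20240229",
    "multimodal": "gpt-4o",
}


def pick_model(priority: str) -> str:
    """Return the model ID for a priority, or raise a clear error."""
    try:
        return MODEL_BY_PRIORITY[priority]
    except KeyError:
        raise ValueError(
            f"unknown priority {priority!r}; choose from {sorted(MODEL_BY_PRIORITY)}"
        ) from None


def ask(prompt: str, priority: str = "balanced") -> str:
    """Send one prompt to the chosen model through an OpenAI-compatible endpoint."""
    from openai import OpenAI  # imported lazily so pick_model works without the SDK

    client = OpenAI(base_url="https://api.apiyi.com/v1",
                    api_key=os.environ["APIYI_API_KEY"])
    response = client.chat.completions.create(
        model=pick_model(priority),
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

For example, `ask("Summarize the main limitations of untargeted metabolomics.", priority="quality")` sends the same prompt to the higher-quality model. Comparing models then only means calling `ask` with a different `priority`.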

Q4: How reliable are Kosmos’s conclusions?

Independent scientist evaluations show that 79.4% of the claims in Kosmos reports are accurate. Importantly, every conclusion Kosmos makes is traceable back to specific code or literature, so researchers can quickly verify any questionable findings. We recommend using Kosmos as a research accelerator rather than a total replacement for human review.
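The 79.4% figure also indicates roughly how much checking to budget for. Assuming, for illustration only, that each statement is independently accurate with probability 0.794, a report with n statements contains on average n × 0.206 inaccurate ones, with a binomial standard deviation of √(n × 0.794 × 0.206). The helper below is a back-of-the-envelope estimate, not a property of Kosmos itself.

```python
import math


def review_load(n_statements: int, accuracy: float = 0.794) -> tuple[float, float]:
    """Expected number of inaccurate statements and its binomial standard deviation."""
    error_rate = 1.0 - accuracy
    expected = n_statements * error_rate
    std_dev = math.sqrt(n_statements * accuracy * error_rate)
    return expected, std_dev


if __name__ == "__main__":
    expected, sd = review_load(50)
    # Rough ~95% range via the normal approximation (mean ± 2 standard deviations).
    low, high = max(0.0, expected - 2 * sd), expected + 2 * sd
    print(f"50 statements: ~{expected:.1f} likely wrong (roughly {low:.0f} to {high:.0f})")
```

For a 50-statement report, this suggests about 10 statements that are likely to be wrong, with a plausible range of roughly 5 to 16. Because each conclusion is traceable, those are the ones worth re-checking against their code or sources.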

Q5: What hardware do I need to run Kosmos?

Minimum requirements for self-hosting Kosmos:

- Python 3.11+

- Docker (recommended for secure code execution)

- A stable internet connection (for API calls and literature retrieval)

Since the heavy lifting is done by the cloud-based Large Language Model API, your local machine mainly handles orchestration and result processing. A standard development machine will work just fine.
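A quick preflight check can confirm these prerequisites before you start a long run. This is a minimal sketch using only the standard library. The `APIYI_API_KEY` variable name comes from the configuration example, and the check does not test network connectivity.

```python
import os
import shutil
import sys


def preflight(min_python: tuple[int, int] = (3, 11),
              api_key_var: str = "APIYI_API_KEY") -> list[str]:
    """Return a list of problems; an empty list means the basics are in place."""
    problems = []
    if sys.version_info < min_python:
        found = f"{sys.version_info.major}.{sys.version_info.minor}"
        problems.append(f"Python {min_python[0]}.{min_python[1]}+ required, found {found}")
    if shutil.which("docker") is None:
        problems.append("docker not found on PATH (recommended for sandboxed execution)")
    if not os.getenv(api_key_var):
        problems.append(f"environment variable {api_key_var} is not set")
    return problems


if __name__ == "__main__":
    issues = preflight()
    if issues:
        for issue in issues:
            print(f"✗ {issue}")
    else:
        print("✓ Ready to run Kosmos")
```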

Summary

Kosmos AI represents a significant milestone in AI-assisted scientific research. It not only boosts research efficiency substantially; more importantly, its fully traceable design makes the AI's reasoning process transparent and verifiable.

Key Points Recap:

- Kosmos is an autonomous AI scientist system; a single run is reportedly equivalent to about 6 months of human research.

- Supports multiple LLM providers like Anthropic Claude and OpenAI GPT.

- The open-source version can access third-party API platforms via LiteLLM.

- 79.4% conclusion accuracy, with every conclusion being fully traceable.

- Seven highlighted discoveries spanning metabolomics, materials science, neuroscience, and statistical genetics.

💡 Recommendation: If you're working on data-driven scientific research, Kosmos is an efficiency-boosting tool worth trying. By accessing it through the APIYI (apiyi.com) platform, you'll get unified API management, better cost control, and the ability to switch between multiple models easily, making your research work much more efficient.

References

- Kosmos paper: arXiv:2511.02824, "Kosmos: An AI Scientist for Autonomous Discovery". Link: arxiv.org/abs/2511.02824

- Edison Scientific official announcement: "Announcing Kosmos". Link: edisonscientific.com/articles/announcing-kosmos

- Open-source repository: Kosmos on GitHub. Link: github.com/jimmc414/Kosmos

- Edison Scientific Platform: official hosted service. Link: platform.edisonscientific.com

📝 Author: APIYI Technical Team | 🌐 More technical articles: apiyi.com/blog

This article was written by the APIYI (apiyi.com) technical team, focusing on technical sharing and practical guides for Large Language Model APIs. To experience API calling services for models like Claude and GPT, feel free to visit apiyi.com to learn more.